GPT or Large Language Models are slowly permeating our work lives. Besides the very popular Retrieve-Gen paradigm (e.g., chat with enterprise docs), an emerging enterprise use case I’ve seen is that of lightweight text data analysis using large language models.

For example, consider this sentence duplicate filtering task:

Given a list of sentences, filter out duplicates. In the output filtered list, keep exactly one of the duplicates. Ensure the filtered list covers the input list fully: no sentence is left out.

In practice, the task could be more complex: say, given two groups of sentences, find duplicates and output the matching indices. or, summarize each paragraph across articles into short sentences and then find duplicates. or, find common topics across snippets and label each snippets, and so on. However, let’s stick to the simpler sentence duplicate filtering task for this discussion.

The conventional approach to solve this task is to write code manually using the popular data analysis libraries in the Python ecosystem, but:

we may want to avoid implementing the tricky sentence duplicate check.

feeling lazy! can GPT do it for me, please?

don’t want to continue refining code as the spec becomes more complex

So, we call upon GPT to do this task for us. Now, here is the catch. GPT is highly capable and excels at nearly solving problems. However, it often falls short of being truly dependable in the final stretch. We will see some examples in a moment.

So, how do you get along with such a capable, but unreliable copilot?

Not an easy question to answer. To make some headway, let us explore a few scenarios of co-working with the GPT.

Strategy 1: Ask GPT to solve end-to-end

The overall Strategy:

setup the prompt with instructions

observe and verify the output on some data samples

if instructions not followed or not sufficient: iterate to improve the prompts

Here is a sample session with GPT that implements this strategy.

For the given list of sentences, remove all duplicates and output a filtered list. Do not write code. Only give me the final list.

"The sky is blue.",

"The grass is green.",

"The sky is blue.",

"The sun is shining.",

"The color of grass is green."

Output:

1. "The sky is blue."

2."The grass is green."

3. "The sun is shining."

4. "The color of grass is green."

Here 1 and 4 look like duplicates but are not filtered out. So, let us refine the prompt.

Assume sentences are duplicates if they mean roughly the same. Can you repeat the previous instruction?

Output

"The sky is blue."

"The grass is green."

"The sun is shining."

Great! we are done on the earlier task. But just realized we have an additional requirement: pick the longer duplicate sentence.

"The color of grass is green."

"The sun is shining."

"The sky is blue."

Good, now looks like we’re done. Well, maybe not.

We used ChatGPT for the above session. Cannot reproduce it with the GPT APIs. Either outputs don’t match or one of the prompts doesn’t work.

Works on the 4-element list sample but how do we ensure full coverage in general?

suppose our original list is 1000 sentences long. What if GPT drops sentences quietly from the original list?

how do we verify? write code to verify coverage?

or, maybe refine prompt further to verify coverage? but who knows, yet another issue lurking beneath?

This is quite frustrating - we are almost there but can’t trust GPT to get past the finish line. At the same time, we are unable to quickly verify if an output list covers the input list fully. We’ve ended up with an unreliable word program which is hard to fix.

Tired of prompt refining, we abandon this end-to-end strategy and switch to a more micro-managed one. When in trouble, regain control!

Strategy 2: Ask GPT to write code

Python code is a more trusted intermediary between us and GPT. We don’t want to write it ourselves — thankfully, GPT can write it for us. So, here’s the prompt:

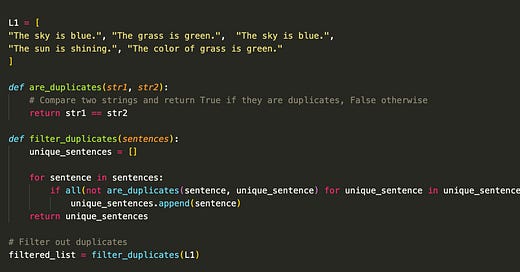

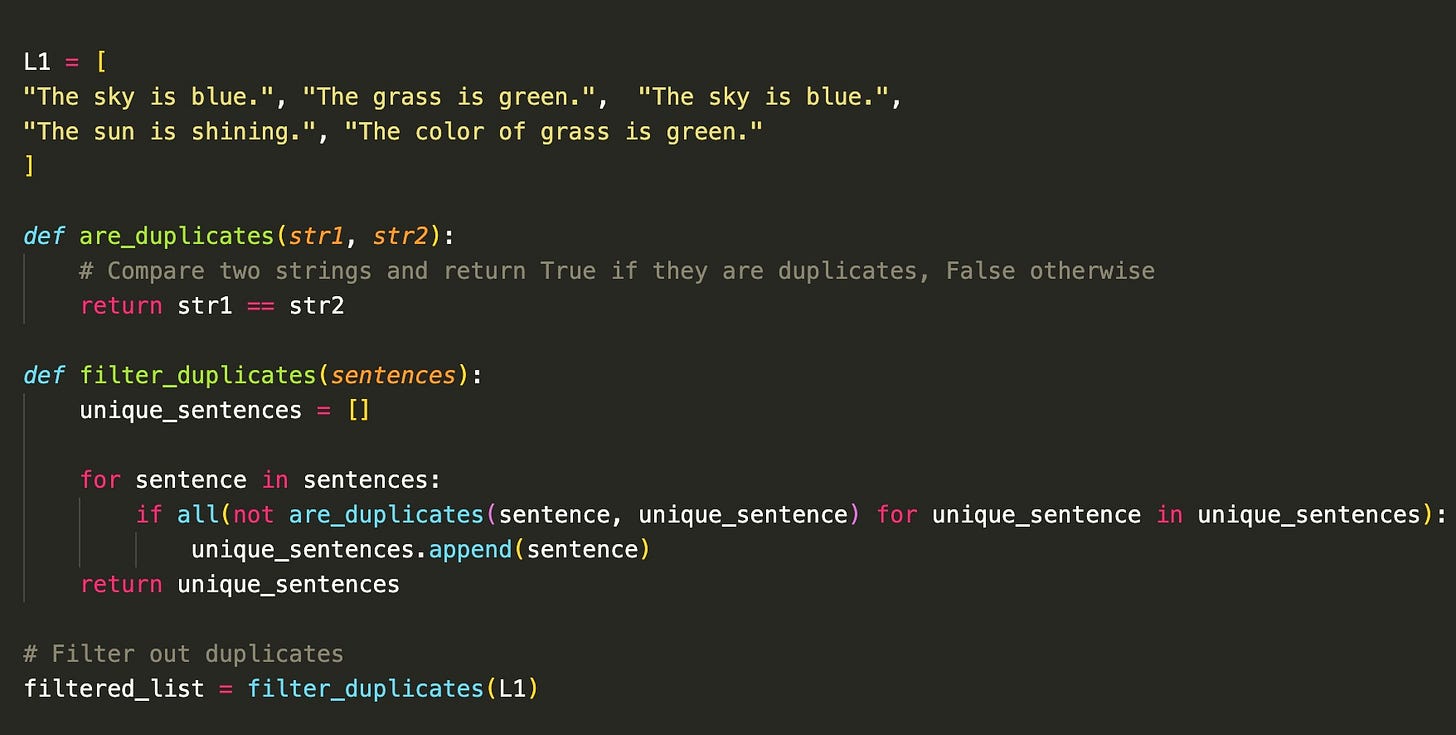

Write python code to filter out duplicates from a list L1 of sentences. Use a separate function to check if two strings are duplicate.

Output:

Looks OK, but there is a problem: the function are_duplicates is naive because it looks for an exact string match. It will fail for duplicate pairs “The grass is green”, “The color of grass is green”. We need a way to compare sentences semantically.

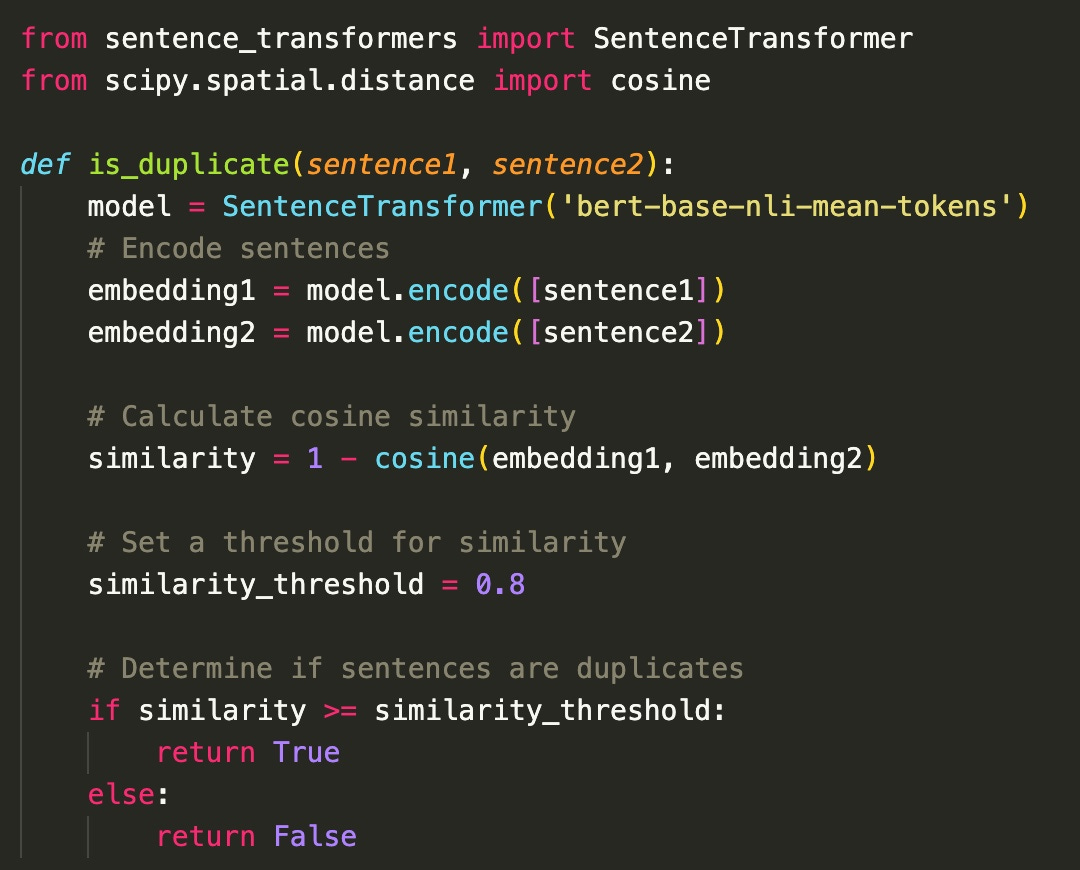

Comparing sentences semantically isn’t a traditional Leetcode algorithmic task. However, with off-the-shelf neural encoders available, it is easy to implement are_duplicates() in a few lines of code.

Write an is_duplicate function which calls the sentence encoders to embed and compare two sentences.

A sigh of relief! We have a complete solution now (with minor refactoring), which looks quite deterministic and reliable.

Plus, we’ve saved time by avoiding to write that tricky nested loop and reading sentence encoder documentation.

The downside, however, is that now we’ve to maintain this code (instead of natural language instructions in Strategy 1, decide upon hyper-params like similarity_threshold, update it with changing specifications etc.

So which one do you pick — Strategy 1 vs 2?

Yielding vs Retaining control

Let us take a step back and analyze our strategies with GPT so far.

In the first strategy we trust GPT, rely on it to follow our instructions carefully. We carefully craft a prompt, prompt GPT and trust its results!

By trusting, we give away control, with the belief that GPT is a reliable assistant. We know that’s not true but would definitely like to believe it is one. It is a bet — if it works, nothing sweeter than that!

In the second strategy, we trust GPT less. We trust it to write code almost correctly for this simple task. Of course, the code is short, so we can de-risk ourselves by quickly verifying the code and then conveniently withdrawing that meagre token of trust too.

The two strategies reflect the tradeoff that is at the heart of working with GPT:

When do we retain control vs give it away?

The Trust Tug-of-War

When starting to work on a task, users often start with delegating end-to-end to GPT optimistically (Strategy 1). This might be surprising to some but I’ve seen quite a few business clients do that.

Write elaborate prompts for GPT to perform text data wrangling tasks or more general text generation tasks

Stuck in a debugging nightmare forever, refining prompts, testing different LLMs etc, worrying about costs.

While small test samples often go through, when you shove in the real big data, debugging becomes a nightmare.

Verifying the results manually is very time consuming.

Iterating to correct the prompt and retrying is second-degree frustrating.

Because you are almost there, it is hard to relinquish your trust in GPT and go back to say Strategy 2, or even more primordial methods.

What starts off as a heavy duty belief in GPT ends up with frustration and severely diminished trust. We want to recoup whatever effort / time we’ve lost before withdrawing back to more familiar techniques.

By calling upon GPT to do a task for us, we enter into a trust tug-of-war with it, where we must constantly decide whether to yield or retain control.

Roll back your Trust Incrementally?

Although we only considered a simple task, this tug-of-war is the norm when co-piloting tasks with GPT. We believe that we can prompt-engineer our way to a winning strategy in this to-and-fro game, but can never be sure until we execute to the finish.

That leaves us with a final set of questions:

When do you know that it is time to rollback your GPT-trust for this task?

How far do you rollback? Do you dump GPT fully and go back to traditional structured tools?

or, maybe split down the task into many subtasks and take over control for only a few of them?

which sub-tasks are more amenable to GPT?

Can we predict ahead-of-time which (hybrid?) strategy will solve the task reliably, with least time and effort?

Although GPT and Large Language Models are improving continuously, I don’t see this tug-of-war going away. Knowing when to delegate to GPT or not, is going to be crucial.