Prompts are Programs

Just far more accessible to everyone than "older" programs.

From automating everyday tasks to powering complex simulations, computer programs are the invisible backbone of our digital world. These meticulously crafted sets of instructions, written in formal, structured languages, tell computers precisely what to do. Effective programs are built on core principles: modularity (breaking complex tasks into manageable components), the ability to generate values or even other programs, composability, and reusability. Rigorous testing, from focused unit tests to comprehensive system tests, ensures software quality and reliability.

In recent years, Large Language Models (LLMs) have introduced a paradigm shift in human-machine interaction: we can now communicate with machines in natural language, issuing instructions and accomplishing tasks through simple, intuitive prompts.

In this article, I will illustrate how prompts for LLMs exhibit characteristics analogous to those of programs. Well-designed prompts are not mere requests but a new form of programming, mirroring the structure and power of traditional code.

Why is it useful to think of Prompts as Programs?

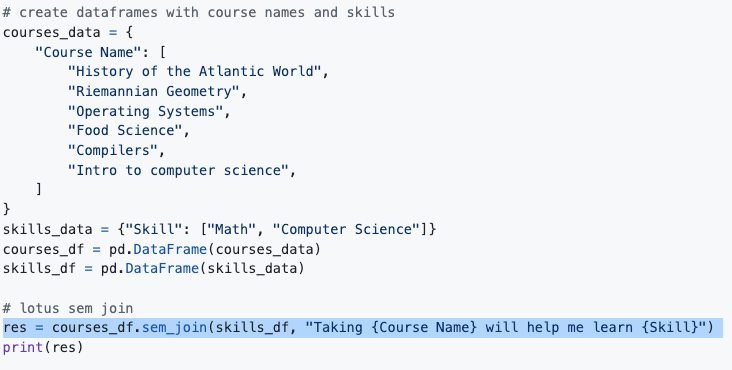

Before we delve deeper, let me quickly clear the air on why this line of thought makes sense at all (rather than being yet another intellectual overdrive). Consider this snippet from the upcoming Lotus library.

This snippet performs a table join between the courses and skills dataframes, but slightly generalises the traditional join operator: it introduces a new semantic join (sem_join) operator, which works as follows.

A semantic join correlates values from the courses and skills columns only when they satisfy the following natural language prompt:

Taking {Course Name} will help me learn {Skill}.

The syntax for sem_join is quite intuitive: the placeholders in the prompt are replaced by actual values from the respective dataframe columns. However, the precise underlying semantics isn’t obvious. Take a moment to think about how and why this operator works.

One way to gain semantic clarity is to view the natural language prompt as a filter function that returns True only for the (course, skill) pairs for which the prompt holds. How do we evaluate this filter function, i.e., the prompt? An LLM interprets the prompt and evaluates it to True or False. Given these (course, skill) pairs, we can then apply the traditional table join to obtain the desired result.
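The filter view can be sketched in a few lines of plain Python. This is not the Lotus API: `fake_llm_judge` is a hypothetical stand-in for an LLM that answers True/False, and `sem_join` is a naive nested-loop reading of the semantics (fill the prompt for every (course, skill) pair and keep the accepted ones), not how Lotus actually executes the operator.

```python
from itertools import product
from typing import Callable

Row = dict[str, str]

def fake_llm_judge(prompt: str) -> bool:
    """Hypothetical stand-in for an LLM that evaluates a prompt to True/False.

    A real system would send the prompt to a model; a fixed set of
    'true' statements keeps this sketch runnable offline.
    """
    true_statements = {
        "Taking Intro to Databases will help me learn SQL.",
        "Taking Machine Learning 101 will help me learn Python.",
    }
    return prompt in true_statements

def sem_join(left: list[Row], right: list[Row], template: str,
             judge: Callable[[str], bool]) -> list[Row]:
    """Naive semantic join: keep each (left, right) pair whose filled-in prompt the judge accepts."""
    result = []
    for l_row, r_row in product(left, right):
        # Fill placeholders such as {Course Name} and {Skill} from both rows.
        prompt = template.format_map({**l_row, **r_row})
        if judge(prompt):
            result.append({**l_row, **r_row})
    return result

courses = [{"Course Name": "Intro to Databases"}, {"Course Name": "Machine Learning 101"}]
skills = [{"Skill": "SQL"}, {"Skill": "Python"}]
pairs = sem_join(courses, skills,
                 "Taking {Course Name} will help me learn {Skill}.", fake_llm_judge)
print(pairs)
```

Swapping `fake_llm_judge` for a real model call changes only the judge; the join logic stays a plain filter over pairs.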

As you write larger programs (say, agents) that compose prompts with structured elements, it gets harder to maintain a clear semantic model of what is going on. Thinking of prompts as functions (with arguments if needed) helps us clearly understand what the mixed program is doing.

Let's now dive in and see systematically how prompts correlate with programs.

Prompts return values 💰

This is business as usual: prompt an LLM to get a text output (a value).

In other words, a prompt acts as a function f: it takes data inputs and outputs data.

For the text-only scenario: f: text [, more text] -> text.

When interpreted by an LLM engine, a prompt function outputs text or multimodal values.
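A minimal sketch of a prompt as a function, assuming an LLM is modeled as any callable from prompt text to output text. `translate` and the upper-casing `echo_llm` stub are hypothetical names chosen for illustration; swapping in a real model client would not change the function's shape.

```python
from typing import Callable

# Assumption: an LLM engine is any callable from prompt text to output text.
LLM = Callable[[str], str]

def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in 'LLM' that upper-cases its input so the sketch runs offline."""
    return prompt.upper()

def translate(text: str, target_language: str, llm: LLM) -> str:
    """A prompt function f: text [, more text] -> text."""
    prompt = f"Translate the following text into {target_language}:\n{text}"
    return llm(prompt)

print(translate("good morning", "French", echo_llm))
```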

Compositionality ⛓️

f: text [, more text] -> text.

Prompt functions take text as input and produce text as output, so they are easy to chain: the output of the first prompt function feeds into the input of the second.

Recall LangChain's pipe ("|") operator.

Prompts are programs, so compositions of prompts are also programs. Some use the term agentic programs for them.
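Here is a sketch of chaining in that spirit. `PromptFn` is a hypothetical wrapper, not LangChain's API, that overloads `|` so that `(a | b)(x) == b(a(x))`; `fake_llm` is a stub that renders an instruction and its input as `Instruction(input)` so the nesting order is visible.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

@dataclass
class PromptFn:
    """A prompt function text -> text that supports `|` chaining (illustrative, not LangChain)."""
    fn: Callable[[str], str]

    def __call__(self, text: str) -> str:
        return self.fn(text)

    def __or__(self, other: PromptFn) -> PromptFn:
        # (self | other)(x) == other(self(x)): the left output feeds the right input.
        return PromptFn(lambda text: other(self(text)))

def fake_llm(prompt: str) -> str:
    """Hypothetical LLM stub: renders an 'Instruction:' header plus body as Instruction(body)."""
    instruction, _, body = prompt.partition(":\n")
    return f"{instruction}({body})"

summarize = PromptFn(lambda text: fake_llm(f"Summarize:\n{text}"))
make_title = PromptFn(lambda text: fake_llm(f"Title:\n{text}"))

pipeline = summarize | make_title
print(pipeline("prompts are programs"))
```

The composed `pipeline` is itself a `PromptFn`, so it can be chained again: a composition of prompt programs is again a prompt program.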

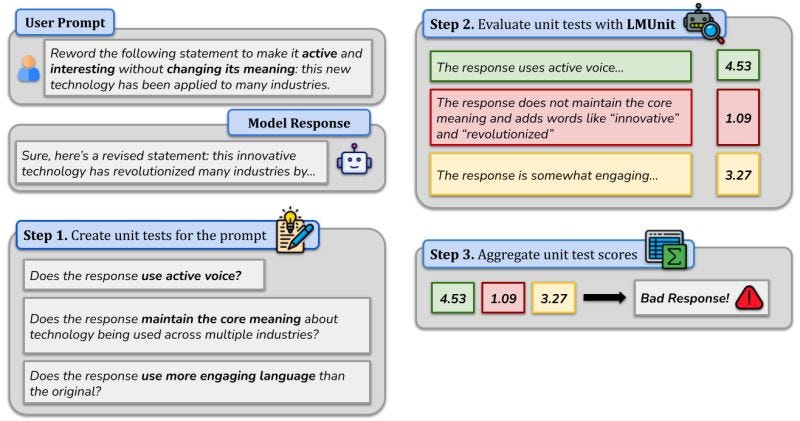

Unit tests for Prompts

A good programming practice is to write unit tests for functions. Why? Unit tests ensure the quality and reliability of code by verifying that individual components of your software function as expected. They also catch bugs early, facilitate refactoring, and more.

Similarly, we need unit tests for prompts: they evaluate the correctness of local prompt programs before we compose them into more complex programs.

For example, see LMUnit from Contextual AI, which lets you write statements and questions in natural language that verify desirable qualities of LLM responses:

Does the response’s tone match the query’s?

Does the response accurately respond to the query?
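A sketch of what natural-language unit tests could look like, assuming a judge that decides whether a response passes a test question. This is not LMUnit's interface: `stub_judge` uses crude string checks where a real harness would ask an LLM, and `run_prompt_unit_tests` is a hypothetical helper.

```python
from typing import Callable

Judge = Callable[[str, str, str], bool]  # (query, response, test) -> passed?

def stub_judge(query: str, response: str, test: str) -> bool:
    """Hypothetical judge. A real setup would ask an LLM whether `response`
    satisfies the natural-language `test`; crude string checks keep this runnable."""
    if "tone" in test:
        # Toy notion of matching tone: both end with '!' or neither does.
        return query.endswith("!") == response.endswith("!")
    if "accurately respond" in test:
        return "Paris" in response
    return False

UNIT_TESTS = [
    "Does the response's tone match the query's?",
    "Does the response accurately respond to the query?",
]

def run_prompt_unit_tests(query: str, response: str, judge: Judge) -> dict[str, bool]:
    """Evaluate every natural-language unit test against one query/response pair."""
    return {test: judge(query, response, test) for test in UNIT_TESTS}

results = run_prompt_unit_tests(
    "What is the capital of France?", "The capital of France is Paris.", stub_judge)
for test, passed in results.items():
    print("PASS" if passed else "FAIL", test)
```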

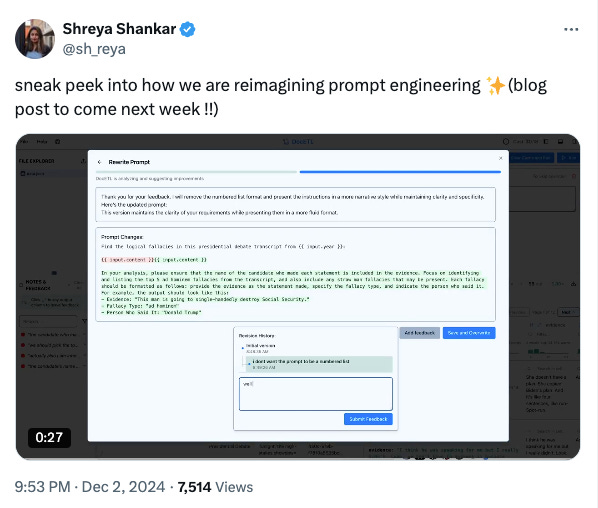

Higher Order Prompts

One can write a prompt which creates another prompt, which creates another …

Programs that create programs (functions that return functions) are considered higher-order programs; examples include Python decorators, closures, and factory functions. Intuitively, they allow modeling more complex patterns.

Higher-order prompts are quite pervasive and act as prompt transformers or augmenters. For example, you can prompt ChatGPT to create, in turn, a prompt for a specific task.
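A higher-order prompt can be sketched as a Python function that returns a function: it asks an LLM to write a task prompt, then returns a new prompt function built from that generated prompt. `META_PROMPT`, `make_prompt_fn`, and `stub_llm` are hypothetical; the stub returns a canned template so the example runs offline.

```python
from typing import Callable

LLM = Callable[[str], str]

# Hypothetical meta-prompt; '{{input}}' survives .format() as a literal '{input}' hole.
META_PROMPT = "Write a prompt template, with a {{input}} placeholder, for this task: {task}"

def stub_llm(prompt: str) -> str:
    """Hypothetical LLM stub: writes a template for meta-prompts, otherwise tags the prompt."""
    if prompt.startswith("Write a prompt template"):
        task = prompt.split("task: ", 1)[1]
        return f"You are an expert. {task}\nInput: {{input}}"
    return f"ANSWER<{prompt}>"

def make_prompt_fn(task: str, llm: LLM) -> Callable[[str], str]:
    """Higher-order prompt: the LLM writes a task prompt, and we return a
    new prompt function (text -> text) built from that generated prompt."""
    generated_template = llm(META_PROMPT.format(task=task))

    def prompt_fn(user_input: str) -> str:
        return llm(generated_template.replace("{input}", user_input))

    return prompt_fn

summarize_reviews = make_prompt_fn("Summarize product reviews.", stub_llm)
print(summarize_reviews("Great battery life."))
```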

Here is a practical example from data analysis, in the DocETL framework. The flow is as follows:

Create an initial prompt

View the output generated by the prompt (much like a function returns a value)

Provide feedback/supervision to improve the prompt

Create a revised prompt

Who creates the revised prompt? A behind-the-scenes higher-order prompt.
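The loop can be sketched with the feedback step and the higher-order rewrite passed in as functions. This is not DocETL's API; `refine_prompt`, `run`, `critique`, and `rewrite` are hypothetical names, and the stubs stand in for LLM calls and human review.

```python
from typing import Callable, Optional

def refine_prompt(
    prompt: str,
    run: Callable[[str], str],                   # task prompt -> output
    critique: Callable[[str], Optional[str]],    # output -> feedback, or None if satisfied
    rewrite: Callable[[str, str, str], str],     # higher-order prompt: (prompt, output, feedback) -> new prompt
    max_rounds: int = 3,
) -> str:
    """Run the prompt, view its output, give feedback, and create a revised prompt."""
    for _ in range(max_rounds):
        output = run(prompt)
        feedback = critique(output)
        if feedback is None:
            break
        prompt = rewrite(prompt, output, feedback)
    return prompt

def run(prompt: str) -> str:
    # Stub task LLM: produces bullet points only when the prompt asks for them.
    return "- point A\n- point B" if "bullet" in prompt else "A long paragraph."

def critique(output: str) -> Optional[str]:
    # Stub supervision: we want bullet points.
    return None if output.startswith("- ") else "Please answer with bullet points."

def rewrite(prompt: str, output: str, feedback: str) -> str:
    # Stub higher-order prompt: a real one would ask an LLM to revise `prompt`.
    return f"{prompt} Follow this feedback: {feedback}"

final = refine_prompt("Summarize the document.", run, critique, rewrite)
print(final)
```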

Prompt Augmentations

Many frameworks utilize higher-order prompts for improving lower-order prompts.

DSPy, TextGrad

DSPy and GPTSwarm optimize a prompt template for each node, using as context the input-output pairs observed at each node during predictions.

TextGrad and OptoPrime assign feedback to each component in a prompt network, much like backpropagation on numeric vectors.

More formally, here is the prompt augmentation flow.

Given a task prompt, the LLM produces an unsatisfactory output.

We want to improve the task prompt so that the LLM produces a more "correct" output.

In general, find a prompt augmenter function that transforms a prompt to perform better on an objective (from Kambhampati's post).
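One simple reading of "find an augmenter that performs better on an objective" is a search over candidate augmenters, each scored on a small dev set. The candidate augmenters, `DEV_SET`, and the stub LLM (which answers in the expected format only when asked for one word) are assumptions made for illustration.

```python
from typing import Callable

Augmenter = Callable[[str], str]  # prompt -> augmented prompt

DEV_SET = [("Capital of France?", "Paris"), ("Capital of Japan?", "Tokyo")]
FACTS = dict(DEV_SET)

def stub_llm(prompt: str, question: str) -> str:
    """Hypothetical LLM: answers tersely (matching the gold label) only when asked for one word."""
    answer = FACTS[question]
    return answer if "one word" in prompt else f"The answer is {answer}."

def objective(prompt: str) -> float:
    """Fraction of dev-set questions answered exactly right under `prompt`."""
    correct = sum(stub_llm(prompt, q) == gold for q, gold in DEV_SET)
    return correct / len(DEV_SET)

def best_augmenter(prompt: str, augmenters: dict[str, Augmenter],
                   objective: Callable[[str], float]) -> tuple[str, str]:
    """Return (name, augmented prompt) for the augmenter that scores highest on `objective`."""
    scores = {name: objective(aug(prompt)) for name, aug in augmenters.items()}
    best = max(scores, key=scores.get)
    return best, augmenters[best](prompt)

augmenters = {
    "identity": lambda p: p,
    "add_format": lambda p: p + " Answer in one word.",
    "add_example": lambda p: p + " Example: 2+2 -> 4.",
}
name, new_prompt = best_augmenter("Answer the question.", augmenters, objective)
print(name, "->", new_prompt)
```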

Interestingly, the RAG paradigm is an instance of prompt augmentation: a higher-order function that adds context to the original prompt to make it a better prompt.
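Viewed this way, RAG is a function that takes a prompt function and returns an augmented one. In this sketch, `with_retrieval` is the higher-order augmenter; the keyword retriever, `DOCS`, `STOPWORDS`, and the echoing LLM stub are hypothetical toy components (a real system would typically retrieve with embedding search).

```python
import re
from typing import Callable

PromptFn = Callable[[str], str]

def with_retrieval(prompt_fn: PromptFn, retrieve: Callable[[str], list[str]]) -> PromptFn:
    """Higher-order augmenter: returns a new prompt function that prepends retrieved context."""
    def augmented(question: str) -> str:
        context = "\n".join(retrieve(question))
        return prompt_fn(f"Context:\n{context}\n\nQuestion: {question}")
    return augmented

DOCS = ["The Eiffel Tower is in Paris.", "Jinja is a templating language."]
STOPWORDS = {"what", "is", "a", "the", "in"}

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS

def keyword_retrieve(question: str) -> list[str]:
    """Toy retriever: documents sharing a non-stopword with the question."""
    q = words(question)
    return [d for d in DOCS if q & words(d)]

def echo_llm(prompt: str) -> str:
    return prompt  # stub LLM that returns its input, so the augmented prompt is visible

def answer_prompt(text: str) -> str:
    """The original, unaugmented prompt function."""
    return echo_llm(f"Answer concisely.\n{text}")

rag_answer = with_retrieval(answer_prompt, keyword_retrieve)
print(rag_answer("What is Jinja?"))
```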

Reusing Prompts ♻️

This is particularly interesting and important.

Imagine you are working on a new task, which is very similar to one of the older tasks you've implemented. If you can't reuse the older function for the new task, you will keep writing (variants of the) same stuff over and over again. That sucks! Coding won't scale to complex tasks.

Being able to reuse old, similar prompts is important. Structured languages (e.g., Python) provide reusable primitives: functions and modules. But prompts are written in plain English, and writing English in a reusable manner is pretty hard! This is one of the reasons we have a hard time writing programs that use LLMs.

Thankfully, this isn't the first time we’ve encountered this problem. Web development has produced an abundance of templating languages that allow writing templates with holes, which can be filled in later with numeric values or text.

For example, the Jinja templating language includes multiple reuse mechanisms:

Named Blocks create the main structure of your template, giving each section a specific name.

Define Macros for reusable components or sub-templates that can be called multiple times.

Use an Inline Include Pattern for content you want to "include" within the same file.

{# Inline include: capture sub-template content in a variable; {% include %} pulls in separate files #}
{% set sub_template %}This is the included template content.{% endset %}
{{ sub_template }}

{# Define a macro and call it #}
{% macro greet(name) %}<p>Hello, {{ name }}!</p>{% endmacro %}
{{ greet("Alice") }}

{# Define a named block; a child template that extends this one can override it #}
{% block child_template %}
Can override this block later on
{% endblock child_template %}

A templating language like Jinja enables decoupling different pieces of information when writing a prompt program, adding, composing, or overriding information on demand.

Think of templates as enabling function currying or partial application: parameter values are added on demand.
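Partial application of a prompt template can be sketched with Python's standard `string.Template`, used here instead of Jinja so the example needs no dependencies: `safe_substitute` fills only the holes it is given and leaves the rest in place, and `substitute` fills the remainder later. The template text is made up for illustration.

```python
from string import Template

# A prompt template with two holes, filled at different times.
base = Template("You are a $role. Answer the user's question: $question")

# "Partial application": fill only $role now; safe_substitute leaves $question intact.
tutor_prompt = Template(base.safe_substitute(role="patient math tutor"))

# Later, fill the remaining hole (substitute raises KeyError if any hole is still missing).
final_prompt = tutor_prompt.substitute(question="What is a prime number?")
print(final_prompt)
```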

DevOps tools like Ansible also let us decouple data (YAML) from text templates and compose them on demand.

$ template.yaml
name: "{{ inventory_hostname }}"
roles:
{% for role in roles %}
- {{ role }}-role
{% endfor %}

$ data.yaml
inventory_hostname: "my_host"
roles:
- web
- db
- cache

$ rendered output
name: "my_host"
roles:
- web-role
- db-role
- cache-role
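The rendering step is just a function applied to data. A hand-written Python equivalent of the template above makes that explicit; `render` is a hypothetical name, and Ansible itself renders such templates with Jinja2 rather than with code like this.

```python
# Data, kept separate from the template (mirrors data.yaml above).
data = {"inventory_hostname": "my_host", "roles": ["web", "db", "cache"]}

def render(data: dict) -> str:
    """Hand-written equivalent of template.yaml: the template is a function of the data."""
    lines = [f'name: "{data["inventory_hostname"]}"', "roles:"]
    # The {% for %} loop becomes a list comprehension over the roles.
    lines += [f"- {role}-role" for role in data["roles"]]
    return "\n".join(lines)

print(render(data))
```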

Template-based Prompts: more examples

GPT-pilot uses Jinja templating extensively: prompt parts are stored in a complex file hierarchy and loaded on demand to create the final prompt for the LLM. See also prompt design at character.ai.

Versioning, Execution Tracing, Debugging

LLMs aren't deterministic interpreters: they may yield different outputs for the same input. So humans spend a lot of time trying different prompt variations to get the desired, somewhat deterministic outputs.

How do we store and efficiently navigate through different versions?

How do we debug prompt programs that are created on the fly at execution time?

Many observability platforms address these use cases, e.g., Langfuse, LangSmith, Arize, PromptLayer, W&B and so on.
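What these platforms track can be sketched as an in-memory registry: each named prompt keeps its version history, and every run records the rendered prompt, its version, a content hash, and the output. `PromptRegistry` is a hypothetical illustration and does not mirror the API of any platform listed above.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class PromptRegistry:
    """Minimal in-memory prompt versioning and execution tracing (hypothetical)."""
    versions: dict[str, list[str]] = field(default_factory=dict)  # name -> prompt texts
    traces: list[dict] = field(default_factory=list)

    def register(self, name: str, text: str) -> int:
        """Store a new version of a named prompt (skipping exact repeats); return its 1-based version."""
        history = self.versions.setdefault(name, [])
        if not history or history[-1] != text:
            history.append(text)
        return len(history)

    def run(self, name: str, llm: Callable[[str], str], **inputs: str) -> str:
        """Render the latest version, call the LLM, and record a trace entry."""
        version = len(self.versions[name])
        prompt = self.versions[name][-1].format(**inputs)
        output = llm(prompt)
        self.traces.append({
            "name": name,
            "version": version,
            "prompt_sha": hashlib.sha256(prompt.encode()).hexdigest()[:12],
            "prompt": prompt,
            "output": output,
        })
        return output

registry = PromptRegistry()
registry.register("greet", "Say hello to {user}.")
registry.register("greet", "Say hello to {user} in one short sentence.")
registry.run("greet", lambda p: f"echo: {p}", user="Ada")
for t in registry.traces:
    print(t["name"], t["version"], t["prompt_sha"], t["output"])
```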

Going Further …

If Prompts are Programs, then what are the right dev environment/tools for them?

What is the best way to bridge structured and prompt programs? For example, turning prompt outputs into structured form (outlines, guidance, LMQL) or using tool interfaces.

How do we ensure a prompt program delivers similar outputs on different LLM interpreters?

How do we find an existing prompt similar to a new one and refactor for maximum reuse?

more …

These questions are being addressed actively by both research and builder communities.

Wrap Up

Prompts mirror Programs. Working with prompts effectively involves solving many problems similar to those of traditional structured programs/software:

how to compose, how to reuse programs

how to write programs which generate programs

how to unit test

how to trace executions, store and navigate different versions

Of course, it is useful to keep the fundamental differences in mind:

Unlike structured languages, prompt programs are written in English and lack obvious structure. Therefore, we need new tools (and the community has been hard at work on them) that help humans work better with unstructured prompt programs.

Structured programs generally produce deterministic output (the same input always results in the same output), whereas LLMs can generate different outputs for the same prompt due to their probabilistic nature.

Benefits of thinking of Prompts as Programs?

Tap into your extensive expertise with traditional, structured software to build robust, reliable software with prompts

Keep yourself sane by having "everything is a function" mental model, even when navigating the world of unpredictable LLMs

Combine prompt functions and structured functions seamlessly

We will see LLMs used for prompt transformation and test-time search in the future. Thinking of prompts as programs helps create better mental models for this.

From the ARC Prize article on o3: o3 fixes the fundamental limitation of the LLM paradigm – the inability to recombine knowledge at test time – and it does so via a form of LLM-guided natural language program search. This is not just incremental progress; it is new territory, and it demands serious scientific attention.

Finally, note that prompt programs (unlike traditional programs) are fully accessible to non-technical users. Everybody can program now! You no longer need to learn to think and speak in a new structured language.

In the new world of prompt programming, the timeless truth remains: programming expertise is not a fixed trait, but a skill that can be developed. It takes practice and dedication to develop skills for prompt programming.

Interestingly, going up the learning curve and becoming a better programmer now mainly involves learning to better organize and manage your thoughts and to communicate them effectively.

Others have also observed how similar prompts and programs are. The phrase appears in the title of an ACM article as well as an arXiv paper.

The phrase "Prompts are programs" seems to elicit intense reactions on social media; see the discussion in the HN thread. I believe the community will come around to see and accept the similarities.

If you’ve reached this far, you may also like my other articles on structured abstractions for prompt programming.